It would be easy to design a similar experiment that would test subjects’ tolerance of interfering noise in both the audio and visual components of this. Objects can range anywhere from transparent to opaque. In addition to describing the color of an object by determining its constituent red, green, and blue components, GEM uses a fourth variable, α, that describes the translucence of an object. The visual cues are also given in both wide and minimum threshold spacings. Additionally, they are asked to recall melodies that are constructed of intervals that are relatively widely spaced, as well as melodies constructed using the subject’s individual minimum threshold interval. In the second part of the experiment, subjects are asked to recall melodies with and without visual cues. Colors are shown in a simple box, with a gradient showing the range of colors displayed next to the box. The subject is then asked to follow a similar pattern in distinguishing between colors. The pitches are played in pairs in various proximities in frequency to each other until the subject is unable to correctly distinguish between the pitches. In the first part of this experiment, the subject is asked if they are able to distinguish between two successive sine tones, based on pitch height (frequency). Additionally, this instrument should give some indication of whether melodies based on the smallest interval that the subject can distinguish are more difficult to remember. Here we demonstrate a test instrument designed to determine if visual cues aid in memory of melodic patterns. This allows for the easy integration of aural and visual stimuli into a test instrument. GEM also uses a visual programming language, and can operate within the Pd environment, processing video and images in realtime, and manipulating polygonal graphics. Pd is available for the SGI IRIX and Windows/NT, and support for the integration of graphics with sound has been added in the form of the GEM. 
Pd continues and updates Max’s visual programming paradigm.

The subject never has to interact with the program other than through the GUI. The results of the test may then be recovered as text. Additionally, subjects finalize their response, return to a previous test, advance to the next test, or end the testing session. The subject may ask to hear the tone they are being asked to match, hear the tone that results from their slider settings, and change that tone in realtime as they listen and move the sliders. The sliders correspond to the carrier frequency and index of the FM tone produced.

#SUPERCOLLIDER GUI TRIAL#

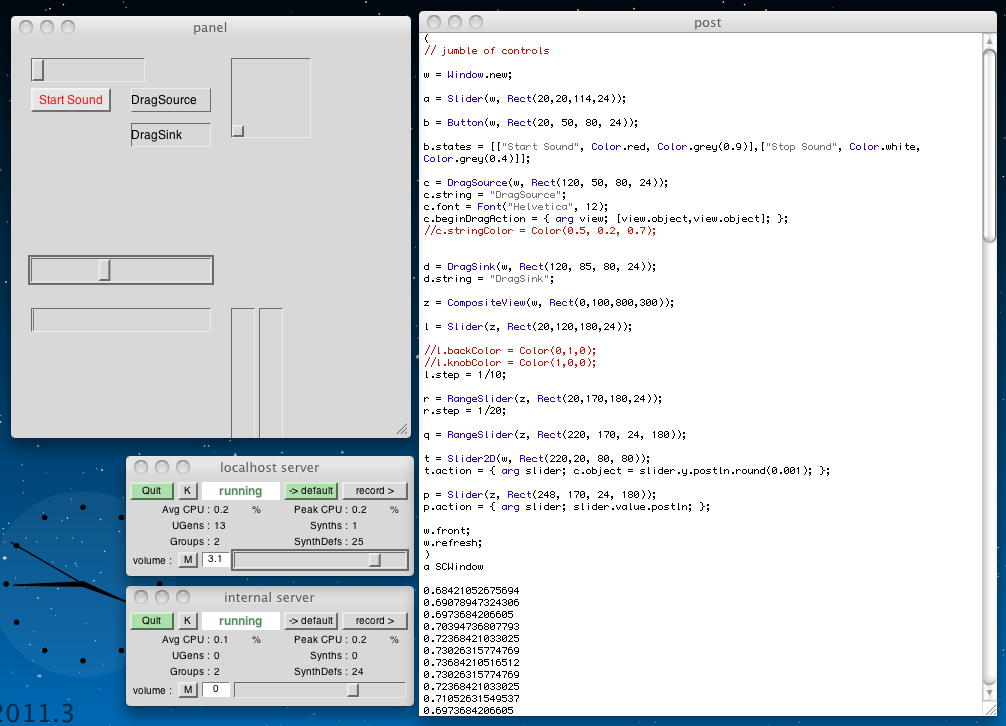

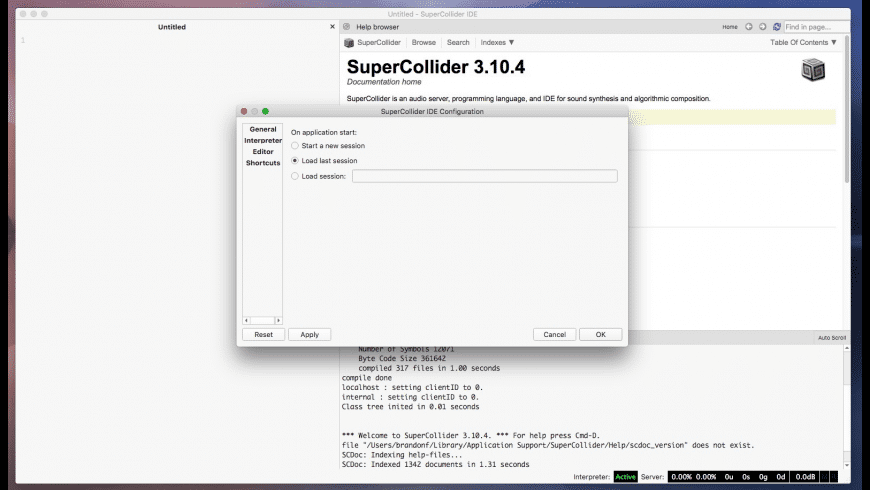

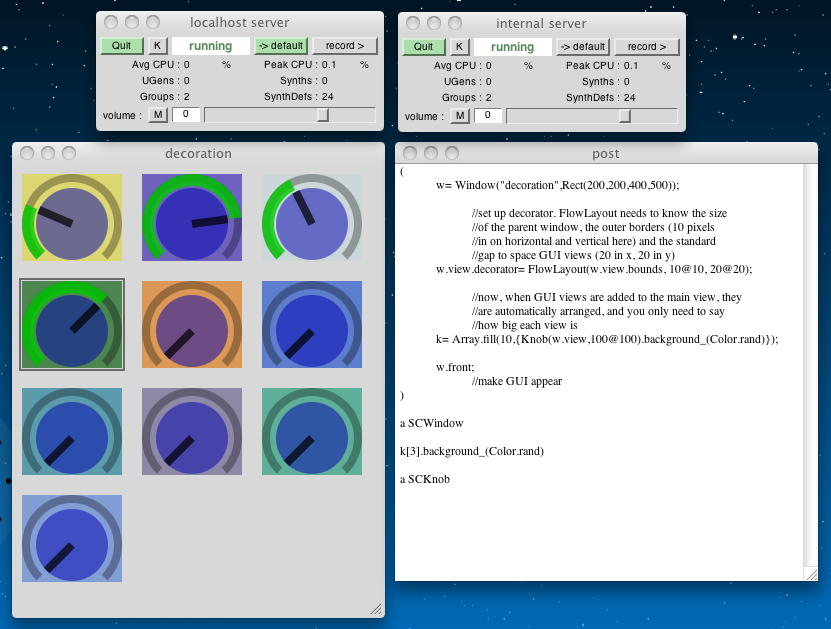

In our trial FM matching experiment using SuperCollider, the subject is asked to manipulate two sliders to match the pitch and timbre of a frequency-modulated test tone ( Figure 2). SuperCollider allows for the programming of synthesized instruments in a higher-level language than has been widely available previously (McCartney 1996). This makes it very simple to not only have the synthesis occur in realtime, but also to base that synthesis on a subject’s interaction with the instrument. However, SuperCollider implements an easily configurable graphical user interface that has intuitive controls such as buttons and sliders that can be assigned to any parameter of the synthesis. Its syntax is borrowed from the commonly-used programming languages SmallTalk and C, and may initially be more difficult to master for a researcher with little programming background, as compared to a programming environment that is completely graphical.

While Max and Pd both use a graphical programming environment, SuperCollider uses a more traditional, but very powerful text-based programming paradigm, and is designed to run only on the Macintosh. These files are accessed in random order during the course of the experiment. Also, all of the stimuli were recordings of an acoustic violin stored as soundfiles on the hard disk.

Max allowed for an intuitive user interface, which has visual feedback for responses entered by selecting one of four choices from the keyboard ( Figure 1). In the second part of the experiment, the order is reversed, with subjects hearing either a straight or vibrato tone for the second pitch.

The subjects are asked to determine if the second pitch is higher or lower than the first pitch. In this experiment, the subject is first presented with either a straight pitch or a vibrato pitch, followed by a straight pitch. Our example of an instrument in MSP tests the affect of vibrato on subjects’ ability to quickly determine pitch (Yoo et al. The MSP externals allow a user to influence the synthesized sound in realtime. create complex designs without knowing any programming language.
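The pitch-discrimination step of the Pd instrument, in which pairs of tones are brought closer together in frequency until the subject can no longer tell them apart, might be sketched as follows. This is an illustrative Python sketch, not the Pd patch itself. The halving rule, the 440 Hz reference, the starting gap, and the names `find_threshold` and `simulated_subject` are all assumptions made for the example. Audio playback is left out, and a simulated listener stands in for the subject.

```python
import random


def find_threshold(respond, base_hz=440.0, start_gap_hz=64.0,
                   min_gap_hz=0.25, rng=None):
    """Shrink the gap between two tones until the subject answers wrongly.

    respond(first_hz, second_hz) should return "higher" or "lower";
    any other answer is counted as incorrect.
    Returns the smallest gap (in Hz) that was answered correctly,
    or None if the subject failed at the starting gap.
    """
    rng = rng or random.Random()
    gap = start_gap_hz
    smallest_correct = None
    while gap >= min_gap_hz:
        # Randomly place the second tone above or below the first.
        direction = rng.choice([1, -1])
        second_hz = base_hz + direction * gap
        expected = "higher" if direction == 1 else "lower"
        if respond(base_hz, second_hz) != expected:
            break  # first wrong answer ends the descent
        smallest_correct = gap
        gap /= 2.0  # assumed step rule: halve the gap each trial
    return smallest_correct


def simulated_subject(limit_hz):
    """Hypothetical listener who hears differences of limit_hz or more."""
    def respond(first_hz, second_hz):
        diff = second_hz - first_hz
        if abs(diff) >= limit_hz:
            return "higher" if diff > 0 else "lower"
        return "unsure"  # below the listener's limit
    return respond


if __name__ == "__main__":
    print(find_threshold(simulated_subject(3.0)))
```

In a real instrument, `respond` would play the two sine tones and collect the subject's answer. The returned gap could then serve as the subject's individual minimum threshold interval when constructing the melodies for the second part of the experiment.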
